Testing OpenAI's Batch API for Classification

If you’ve used ChatGPT heavily, you may have hit its usage limit and been unable to send another message for a couple of hours. If you were working through a large table of data, such as a list of new products, that limit becomes a real problem: you have to wait for it to clear, make sure you aren’t repeating requests you have already made, and work around it in any automation. The standard synchronous API has rate limits too, and hitting them blocks further requests for a while; OpenAI documents these limits in its rate limits guide. Handling large datasets while running into rate limits can be frustrating and limits what you can achieve, but OpenAI have recently released a new API that helps with both of these issues.

With the new Batch API you upload a file of queries, OpenAI runs them at a later time, and once the batch has completed you download the responses as a JSONL file (one JSON object per line). The completion window is currently up to 24 hours, although batches typically finish much sooner.

This means you could bundle hundreds of requests into one batch file, upload it, and at worst download all the responses the next day, without worrying about hitting a rate limit. An additional benefit is that batched requests cost 50% of the normal price. The details are in OpenAI's Batch API documentation. Note that the batch service still has rate limits, but they are substantially higher than those for synchronous requests.
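To make the 50% saving concrete, here is a minimal cost estimate. The function name `estimate_cost` is my own, and the per-million-token prices are placeholders made up for illustration, not OpenAI's actual rates; substitute the current prices for whichever model you use.

```python
# Placeholder prices (USD per 1M tokens), NOT real OpenAI pricing:
# replace them with the current rates for the model you use.
INPUT_PRICE_PER_M = 10.00
OUTPUT_PRICE_PER_M = 30.00
BATCH_DISCOUNT = 0.5  # batched requests cost 50% of the normal rate


def estimate_cost(n_requests, input_tokens_each, output_tokens_each, batch=True):
    """Rough cost of n requests that each use the given token counts."""
    input_tokens = n_requests * input_tokens_each
    output_tokens = n_requests * output_tokens_each
    total = (input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000
    return total * BATCH_DISCOUNT if batch else total


sync_cost = estimate_cost(500, 300, 200, batch=False)
batch_cost = estimate_cost(500, 300, 200, batch=True)
print(f"synchronous: ${sync_cost:.2f}, batched: ${batch_cost:.2f}")
# → synchronous: $4.50, batched: $2.25
```

The token counts per request are also guesses; in practice they depend on the length of the system prompt, which is sent with every request, and on how long the model's answers are.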

To test this new service I put together a basic UI that lets you set a system prompt, open a CSV file, upload its contents to the Batch API, request status updates for uploaded batches, and download completed responses as JSONL and CSV files.

I’ll detail how the process works and how it may be useful below:

The Batching Process

To use the Batch API you need a system prompt that instructs the model how to respond to the subsequent user prompt, much as when you use the standard API or Custom GPTs. Batched requests do not all need the same system prompt, but for this exercise I have used a single system prompt per batch, which can be edited in the UI before each batch is created. Adding a CSV column for a per-row system prompt would be simple enough if you wanted to do this.
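A per-row system prompt could look something like the sketch below. The `SystemPrompt` column and the `messages_for_row` helper are hypothetical (neither is in the article's CSV or script); rows that leave the column empty fall back to the default prompt from the UI.

```python
def messages_for_row(row, default_system_prompt):
    """Build the chat messages for one CSV row.

    "SystemPrompt" is a hypothetical extra column: if a row fills it in, that text
    replaces the default system prompt for that request only.
    """
    system_prompt = (row.get("SystemPrompt") or "").strip() or default_system_prompt
    user_prompt = (
        f"Description of goods: {row['Description']}, "
        f"Material component: {row['Material']}, "
        f"Other detail: {row['Other']}"
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


default_prompt = "You are the UK Customs Code Helper."
rows = [
    {"Description": "Socks", "Material": "cotton", "Other": "ankle socks", "SystemPrompt": ""},
    {"Description": "Mug", "Material": "ceramic", "Other": "", "SystemPrompt": "Reply with the commodity code only."},
]
for row in rows:
    print(messages_for_row(row, default_prompt)[0]["content"])
```

Using `row.get(...)` with an `or ""` fallback means CSV files without the extra column still work unchanged.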

For this test I have reused the prompt from my last article, which classifies goods for UK customs imports. The test is less about classification accuracy and more about the capabilities of the Batch API itself.

To batch the requests, I upload a CSV file in which each row is an individual request and the columns hold the details used to build the user prompt.

The UI below is built in Python; I put it together simply to show how the batching process works.

For the CSV data itself I put together a few rows covering various household items, with some basic descriptions to get a reasonably coherent response from the model.
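As an illustration of the expected layout, here is a small CSV with the `Description`, `Material` and `Other` headers the script's `read_csv()` looks for, and a sketch of how each row becomes one user prompt. The rows are made up for illustration, not the article's test data, and `rows_to_user_prompts` is a hypothetical helper. Values containing commas need to be quoted, as in the second row. The script opens the file with `utf-8-sig` so that the byte-order mark Excel typically adds when saving as "CSV UTF-8" does not end up in the first header name.

```python
import csv
import io

# Illustrative rows only. The headers match the columns the script reads.
SAMPLE_CSV = '''Description,Material,Other
Socks,cotton,ankle socks
Mug,ceramic,"350ml, with handle"
'''


def rows_to_user_prompts(csv_text):
    """Turn each CSV row into the user prompt string sent with that request."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        f"Description of goods: {row['Description']}, "
        f"Material component: {row['Material']}, "
        f"Other detail: {row['Other']}"
        for row in reader
    ]


for prompt in rows_to_user_prompts(SAMPLE_CSV):
    print(prompt)
```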

Using the “Open CSV File” button, the CSV is loaded into memory and converted to the JSON format the API requires. The first row converted to that format is shown below; it includes both the system prompt and the user prompt, as well as the model to use, in this case gpt-4 (the script at the end of this article uses gpt-4o). You can use other models, each with its own costs and rate limits.

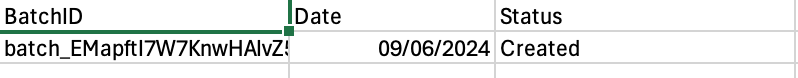

```json
{"custom_id": "request-1", "method": "POST", "url": "/v1/chat/completions", "body": {"model": "gpt-4", "messages": [{"role": "system", "content": "You are the UK Customs Code Helper and are equipped with the ability to classify products based on a text description, material information and other details. This bot remains formal and professional, providing clear and concise 10-digit commodity codes for UK customs. The bot will present the code, followed by a well-reasoned explanation for its selection, helping users understand the classification. The bot maintains a formal tone, avoiding colloquial language and humor. The bot's priority remains accuracy and relevance in classifications, structured to first deliver the commodity code, then explain the reasoning behind it, aiding users in their understanding of UK customs procedures. It's important to note that all recommendations provided by this bot are based on available information and are intended as guidance only. Users are advised to conduct proper research and consult official sources or professionals before making any customs declaration decisions."}, {"role": "user", "content": "Description of goods: Socks, Material component: cotton, Other detail: ankle socks"}], "max_tokens": 1000}}
```

With the JSON queries ready, I can select the “Batch Classify” button, which uploads the file and creates the batch request with OpenAI. I then save the batch ID to a CSV file with the status “Created”. The Batch API returns a lot more data than this when a batch is created, but the batch ID is the critical piece for checking its status later.
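Before uploading, it can be worth sanity-checking the JSONL file, since a malformed line is easier to fix locally than after the batch has been rejected. This is a minimal, non-exhaustive sketch: `validate_batch_lines` is a hypothetical helper, not part of the script or the OpenAI SDK. It checks that each line is a JSON object, that every `custom_id` is present and unique within the file, that each `url` matches the batch endpoint, and (based on my reading of OpenAI's batch guide, which says an input file should target a single model) that only one model is used.

```python
import json


def validate_batch_lines(lines, expected_url="/v1/chat/completions"):
    """Basic pre-upload checks on Batch API JSONL request lines.

    Returns a list of problems; an empty list means these (non-exhaustive) checks passed.
    """
    problems = []
    seen_ids = set()
    models = set()
    for line_no, line in enumerate(lines, start=1):
        if not line.strip():
            continue
        try:
            entry = json.loads(line)
        except json.JSONDecodeError as e:
            problems.append(f"line {line_no}: invalid JSON ({e})")
            continue
        if not isinstance(entry, dict):
            problems.append(f"line {line_no}: expected a JSON object")
            continue
        custom_id = entry.get("custom_id")
        if not custom_id:
            problems.append(f"line {line_no}: missing custom_id")
        elif custom_id in seen_ids:
            problems.append(f"line {line_no}: duplicate custom_id {custom_id!r}")
        seen_ids.add(custom_id)
        if entry.get("url") != expected_url:
            problems.append(f"line {line_no}: url {entry.get('url')!r} does not match {expected_url!r}")
        body = entry.get("body")
        models.add(body.get("model") if isinstance(body, dict) else None)
    if len(models) > 1:
        problems.append(f"more than one model in the file: {sorted(map(str, models))}")
    return problems


sample = [
    '{"custom_id": "request-1", "method": "POST", "url": "/v1/chat/completions", "body": {"model": "gpt-4"}}',
    '{"custom_id": "request-1", "method": "POST", "url": "/v1/chat/completions", "body": {"model": "gpt-4"}}',
]
for problem in validate_batch_lines(sample):
    print(problem)
```

Because it accepts any iterable of lines, the same function works on an open file: in the script it could be called on `open(self.jsonl_file_path, encoding='utf-8')` just before `client.files.create`, returning early if any problems are reported.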

Once a batch has been created and you have its ID, pressing the “Review Batches” button queries its current status and updates the CSV file. These status queries need the batch ID, which is why I store it in a CSV file.
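The script re-queries every batch in `batchRun.csv` each time the button is pressed, including batches that have already finished (and it downloads their output again). If the log grows, a filter like the hypothetical `batches_to_check` below could limit the API calls to batches that can still change. The terminal statuses listed are the Batch API's end states; the others it reports (`validating`, `in_progress`, `finalizing`, `cancelling`) mean the batch is still moving. The batch IDs and dates in the sample log are placeholders.

```python
import csv
import io

# Batch statuses that will not change any further.
TERMINAL_STATUSES = {"completed", "failed", "expired", "cancelled"}


def batches_to_check(log_csv_text):
    """Return BatchIDs from a batchRun.csv-style log whose last recorded status is not terminal.

    Rows marked "Created" or "Error: ..." by the script are kept so they get re-checked.
    """
    reader = csv.DictReader(io.StringIO(log_csv_text))
    return [row["BatchID"] for row in reader if row["Status"] not in TERMINAL_STATUSES]


# Placeholder batch IDs, for illustration only.
LOG = """BatchID,Date,Status
batch_abc,2024-05-01,completed
batch_def,2024-05-02,in_progress
batch_ghi,2024-05-02,Created
"""
print(batches_to_check(LOG))  # → ['batch_def', 'batch_ghi']
```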

Despite the up-to-24-hour window mentioned in the Batch API documentation, I have found that these small batches complete very quickly; this one finished within a couple of minutes, returning a status of ‘completed’.
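The UI checks status manually with a button. If you would rather wait for a batch in a script, a polling loop along these lines would do. `wait_for_batch` is a hypothetical helper; it takes the status lookup as a callable (for example `lambda: client.batches.retrieve(batch_id).status`, using the same `client.batches.retrieve` call as the script) so it can be exercised without the API.

```python
import time

# Statuses after which a batch will not change any further.
TERMINAL_STATUSES = {"completed", "failed", "expired", "cancelled"}


def wait_for_batch(get_status, poll_seconds=60.0, max_polls=1440, sleep=time.sleep):
    """Call get_status() until it returns a terminal status or max_polls calls have been made.

    get_status is any zero-argument callable returning the batch status string.
    The defaults (60 s x 1440 polls) cover roughly the 24-hour completion window.
    Returns the last status seen.
    """
    status = get_status()
    polls = 1
    while status not in TERMINAL_STATUSES and polls < max_polls:
        sleep(poll_seconds)
        status = get_status()
        polls += 1
    return status


# Demo with a fake status sequence instead of real API calls (and no real sleeping).
fake_statuses = iter(["validating", "in_progress", "finalizing", "completed"])
print(wait_for_batch(lambda: next(fake_statuses), sleep=lambda seconds: None))  # → completed
```

Polling once a minute is an arbitrary choice; for small batches like this one a shorter interval is fine, while for large overnight batches a much longer one avoids needless requests.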

With the batch completed, I can retrieve the output file ID, which is also included in the status response from the API. With this file ID I make another API call to download the file that collates all the responses to my queries. The Batch API returns this file as JSONL, which I save as two files, one JSONL and one CSV, both containing the responses. For example, the first response was to the query for socks, and the output from the gpt-4 model was:
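One detail worth handling when reading the output file: OpenAI's documentation notes that output lines are not guaranteed to be in the same order as the input requests, so `custom_id` is what ties each answer back to its request. The sketch below (hypothetical `parse_batch_output`) extracts `(custom_id, answer)` pairs, following the same `response` → `body` → `choices[0]` → `message` → `content` path the script uses, marks failed requests instead of crashing on them, and sorts by the `request-<n>` number the script assigns. The sample lines are simplified mocks; real output lines carry extra fields such as `id` and `request_id`.

```python
import json


def _request_number(custom_id):
    """Numeric part of a 'request-<n>' custom_id; unknown formats sort last."""
    suffix = custom_id.rsplit("-", 1)[-1]
    return int(suffix) if suffix.isdigit() else float("inf")


def parse_batch_output(jsonl_text):
    """Return (custom_id, answer) pairs from the text of a Batch API output file."""
    results = []
    for line in jsonl_text.splitlines():
        if not line.strip():
            continue
        obj = json.loads(line)
        response = obj.get("response") or {}  # may be null when a request failed
        body = response.get("body") or {}
        choices = body.get("choices") or []
        if response.get("status_code") == 200 and choices:
            answer = choices[0].get("message", {}).get("content", "")
        else:
            answer = f"ERROR: {obj.get('error') or body.get('error')}"
        results.append((obj.get("custom_id", ""), answer))
    # Output order is not guaranteed to match input order, so sort by request number.
    results.sort(key=lambda pair: _request_number(pair[0]))
    return results


sample = "\n".join(json.dumps(obj) for obj in [
    {"custom_id": "request-2", "response": {"status_code": 200, "body": {"choices": [{"message": {"content": "Answer B"}}]}}, "error": None},
    {"custom_id": "request-1", "response": {"status_code": 200, "body": {"choices": [{"message": {"content": "Answer A"}}]}}, "error": None},
    {"custom_id": "request-3", "response": None, "error": {"message": "example failure"}},
])
for custom_id, answer in parse_batch_output(sample):
    print(custom_id, answer)
```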

"The commodity code for your product would be 6115.96.9000.

This code is specifically for socks and other hosiery products that are made primarily of cotton. The mention of 'ankle socks' in your description consolidates this classification, as it confirms the product is sock-related. This category includes a wide variety of sock types, ensuring that your described good fits within the given code. Do note that this is based on the provided information and it's always a good idea to verify with official customs sources or professionals."

With that, the batch is complete and all the responses are saved to file. There is no need to send each query individually and copy and paste the responses, no worry about hitting rate limits, and the added bonus of a 50% discount.
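The script's output CSV holds only `content` and `custom_id`. If you want the answers next to the original product rows, a small merge step can join them up. This is a sketch with hypothetical helpers (`merge_answers`, `to_csv`) that assumes, as the script does, that row *i* of the input CSV (counting from 1) was sent as `request-<i>`; the rows and answers below are placeholders.

```python
import csv
import io


def merge_answers(input_rows, answers_by_id):
    """Attach each model answer to the CSV row it was generated from.

    Assumes row i (counting from 1) was sent with custom_id "request-<i>",
    which is how the script numbers its requests.
    """
    return [
        {**row, "Response": answers_by_id.get(f"request-{i}", "")}
        for i, row in enumerate(input_rows, start=1)
    ]


def to_csv(rows):
    """Serialise a list of dicts (all with the same keys) to CSV text."""
    if not rows:
        return ""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()


rows = [
    {"Description": "Socks", "Material": "cotton", "Other": "ankle socks"},
    {"Description": "Mug", "Material": "ceramic", "Other": ""},
]
answers = {"request-1": "example answer 1", "request-2": "example answer 2"}
print(to_csv(merge_answers(rows, answers)))
```

Rows without a matching answer (for example, a failed request) get an empty `Response`, so they are easy to spot and resubmit.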

What is the benefit of batching?

Apart from the cost saving, the real benefit I can see here is better management of large volumes of queries. Classification is a good example: you might have a large number of queries at once, or frequently, and with this process you can create batches and upload them to the Batch API easily. This largely removes the worry about hitting the rate limits you would face with synchronous requests, and it lets you create separate batches for different jobs. You can also upload a large batch and leave OpenAI to work through it while you do other things, retrieving the results the next day.
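For a dataset too large for one batch, since OpenAI caps the number of requests and the file size per batch (check the current limits in the Batch API documentation), the request lines can be split into several files and uploaded as separate batches. A minimal sketch with a hypothetical `chunk_requests` helper:

```python
def chunk_requests(request_lines, max_per_batch):
    """Split request lines into consecutive chunks of at most max_per_batch lines each."""
    if max_per_batch < 1:
        raise ValueError("max_per_batch must be at least 1")
    return [request_lines[i:i + max_per_batch] for i in range(0, len(request_lines), max_per_batch)]


# Seven placeholder request lines split into batches of at most three.
lines = [f'{{"custom_id": "request-{n}"}}\n' for n in range(1, 8)]
chunks = chunk_requests(lines, 3)
print([len(chunk) for chunk in chunks])  # → [3, 3, 1]
```

Each chunk would then be written to its own file (for example a hypothetical `batchinput_1.jsonl`, `batchinput_2.jsonl`, ...) and created as its own batch. Numbering `custom_id`s across the whole dataset, rather than restarting at 1 in each file, keeps the results from different batches easy to match back to the source rows.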

Running your own batches

Testing this yourself is simple enough, and it doesn’t need to be for UK customs classification. The script below is written entirely in Python, and the system prompt and user prompt are easy to adjust within it. It needs the PyQt5 and openai packages installed, and you will need your own OpenAI API key to run it.
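To point the script at a different kind of CSV, one option is to replace the fixed format string in `prepare_json` (and the fixed column check in `read_csv`) with a generic formatter. The hypothetical `build_user_prompt` below turns whichever columns you choose into `Column: value` pairs; the column names in the example are made up.

```python
def build_user_prompt(row, columns=None):
    """Format CSV columns as 'Column: value' pairs for the user message.

    columns selects which columns to include (in that order); None means all of them.
    Empty values are skipped so the model is not sent blank fields.
    """
    columns = list(row) if columns is None else columns
    parts = []
    for column in columns:
        value = (row.get(column) or "").strip()
        if value:
            parts.append(f"{column}: {value}")
    return ", ".join(parts)


row = {"Title": "Wireless mouse", "Category": "Electronics", "Notes": ""}
print(build_user_prompt(row))  # → Title: Wireless mouse, Category: Electronics
```

Since `csv.DictReader` preserves the header order, passing each row straight through keeps the prompt fields in the same order as the spreadsheet.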

```python
import sys
import os
import csv
import json
from datetime import datetime

from PyQt5.QtWidgets import QApplication, QWidget, QVBoxLayout, QPushButton, QTextEdit, QFileDialog, QLabel
from PyQt5.QtGui import QFont
from openai import OpenAI

# Set the OpenAI API key. setdefault only uses the placeholder if OPENAI_API_KEY is not
# already exported in your environment, which is the safer place to keep the key.
os.environ.setdefault('OPENAI_API_KEY', 'YOUR KEY HERE')

client = OpenAI()


class BatchClassifierApp(QWidget):

    def __init__(self):
        super().__init__()
        self.initUI()

    def initUI(self):
        # Vertical layout attached to the main widget
        layout = QVBoxLayout(self)

        # Editable system prompt, applied to every request in the batch
        self.prompt_box = QTextEdit(
            "You are the UK Customs Code Helper and are equipped with the ability to classify products "
            "based on a text description, material information and other details. This bot remains formal "
            "and professional, providing clear and concise 10-digit commodity codes for UK customs. The bot "
            "will present the code, followed by a well-reasoned explanation for its selection, helping users "
            "understand the classification. The bot maintains a formal tone, avoiding colloquial language and "
            "humor. The bot's priority remains accuracy and relevance in classifications, structured to first "
            "deliver the commodity code, then explain the reasoning behind it, aiding users in their "
            "understanding of UK customs procedures. It's important to note that all recommendations provided "
            "by this bot are based on available information and are intended as guidance only. Users are "
            "advised to conduct proper research and consult official sources or professionals before making "
            "any customs declaration decisions."
        )
        font = QFont()
        font.setPointSize(14)
        self.prompt_box.setFont(font)
        self.prompt_box.setMinimumHeight(160)
        layout.addWidget(self.prompt_box)

        # Button to open a CSV file
        self.upload_button = QPushButton('Open CSV File')
        self.upload_button.clicked.connect(self.upload_csv)
        layout.addWidget(self.upload_button)

        # Label showing the selected file path
        self.file_path_label = QLabel('No file selected')
        layout.addWidget(self.file_path_label)

        # Button to create a batch from the prepared JSONL file
        self.classify_button = QPushButton('Batch Classify')
        self.classify_button.clicked.connect(self.batch_classify)
        layout.addWidget(self.classify_button)

        # Button to check the status of logged batches and download completed results
        self.review_button = QPushButton('Review Batches')
        self.review_button.clicked.connect(self.review_batches)
        layout.addWidget(self.review_button)

        self.setWindowTitle('Batch Classification System')
        self.setGeometry(100, 100, 800, 300)  # x, y, width, height

    def upload_csv(self):
        # Open a file dialog to choose a CSV file, then build the batch input from it
        file_path, _ = QFileDialog.getOpenFileName(self, "Open CSV", "", "CSV Files (*.csv)")
        if file_path:
            self.file_path_label.setText(f'Selected File: {file_path}')
            data = self.read_csv(file_path)
            self.prepare_json(data)

    def read_csv(self, file_path):
        # Read the Description, Material and Other columns from each row
        # (utf-8-sig strips the byte-order mark Excel may add)
        data = []
        with open(file_path, mode='r', encoding='utf-8-sig', newline='') as file:
            csv_reader = csv.DictReader(file)
            for row in csv_reader:
                if 'Description' in row and 'Material' in row and 'Other' in row:
                    data.append({
                        'Description': row['Description'],
                        'Material': row['Material'],
                        'Other': row['Other'],
                    })
        print(data)
        return data

    def prepare_json(self, data):
        # Build one user prompt per CSV row
        self.formatted_data = []
        for row in data:
            formatted_row = (f"Description of goods: {row['Description']}, "
                             f"Material component: {row['Material']}, Other detail: {row['Other']}")
            self.formatted_data.append(formatted_row)
        self.output_to_jsonl()

    def output_to_jsonl(self):
        # Write one Batch API request per line (JSONL) using the current system prompt
        output_file = 'batchinput.jsonl'
        with open(output_file, 'w', encoding='utf-8') as f:
            for i, content in enumerate(self.formatted_data, start=1):
                entry = {
                    "custom_id": f"request-{i}",
                    "method": "POST",
                    "url": "/v1/chat/completions",
                    "body": {
                        "model": "gpt-4o",
                        "messages": [
                            {"role": "system", "content": self.prompt_box.toPlainText()},
                            {"role": "user", "content": content},
                        ],
                        "max_tokens": 1000,
                    },
                }
                f.write(json.dumps(entry) + '\n')
        print(f"Output written to {output_file}")
        self.jsonl_file_path = output_file  # remember the path for batch_classify

    def batch_classify(self):
        # Upload the JSONL file and create a batch with the Batch API
        if not hasattr(self, 'formatted_data'):
            print("No data to classify. Open a CSV file first.")
            return

        # Rebuild the JSONL so edits made to the system prompt after opening the CSV are included
        self.output_to_jsonl()
        print("Batch Classification started...")
        print(f"Using prompt: {self.prompt_box.toPlainText()}")

        # Upload the input file (closed automatically afterwards)
        with open(self.jsonl_file_path, "rb") as f:
            batch_input_file = client.files.create(file=f, purpose="batch")

        batch_response = client.batches.create(
            input_file_id=batch_input_file.id,
            endpoint="/v1/chat/completions",
            completion_window="24h",
            metadata={"description": "customs classification batch"},
        )
        print(f"Batch created with ID: {batch_response.id}")
        print(batch_response)

        # Log the batch ID so its status can be checked later
        self.append_batch_id_to_csv(batch_response.id)

    def append_batch_id_to_csv(self, batch_id):
        # Create (if needed) and append to batchRun.csv, the log of all batches run
        csv_file = 'batchRun.csv'
        file_exists = os.path.isfile(csv_file)
        with open(csv_file, mode='a', newline='') as file:
            writer = csv.DictWriter(file, fieldnames=['BatchID', 'Date', 'Status'])
            if not file_exists:
                writer.writeheader()
            writer.writerow({
                'BatchID': batch_id,
                'Date': datetime.today().strftime('%Y-%m-%d'),
                'Status': "Created",
            })
        print(f"Batch ID {batch_id} appended to {csv_file}")

    def review_batches(self):
        # Check every batch in batchRun.csv and download the responses for completed ones
        csv_file = 'batchRun.csv'
        if not os.path.isfile(csv_file):
            print("batchRun.csv does not exist.")
            return

        with open(csv_file, mode='r', newline='') as file:
            batches = list(csv.DictReader(file))

        if not batches:
            print("batchRun.csv is empty.")
            return

        for batch in batches:
            try:
                response = client.batches.retrieve(batch['BatchID'])
                print(response)
                batch['Status'] = response.status
                print(batch['Status'])

                # A completed batch may still lack an output file if every request failed
                if response.status == 'completed' and response.output_file_id:
                    self.save_batch_output(response.output_file_id)
            except Exception as e:
                batch['Status'] = f"Error: {str(e)}"

        # Write the updated statuses back to the log
        with open(csv_file, mode='w', newline='') as file:
            writer = csv.DictWriter(file, fieldnames=['BatchID', 'Date', 'Status'])
            writer.writeheader()
            writer.writerows(batches)
        print(f"Batch statuses updated in {csv_file}")

    def save_batch_output(self, output_file_id):
        # Download the batch output (JSONL) and save it as both .csv and .jsonl files
        decoded_data = client.files.content(output_file_id).read().decode('utf-8')
        print(decoded_data)
        try:
            # Each line of the output file is one JSON object (one response)
            json_objects = [json.loads(line) for line in decoded_data.splitlines() if line.strip()]
        except json.JSONDecodeError as e:
            print("Failed to decode JSON:", e)
            return

        csv_file_path = f"{output_file_id}.csv"
        with open(csv_file_path, 'w', newline='', encoding='utf-8') as csvfile:
            writer = csv.DictWriter(csvfile, fieldnames=['content', 'custom_id'])
            writer.writeheader()
            for obj in json_objects:
                # 'response' may be null for failed requests, so fall back to empty values
                body = (obj.get('response') or {}).get('body') or {}
                choices = body.get('choices') or [{}]
                answer = choices[0].get('message', {}).get('content', '')
                writer.writerow({'content': answer, 'custom_id': obj.get('custom_id', '')})
        print(f"Output CSV file {csv_file_path} saved.")

        # Also keep the raw responses, one JSON object per line
        with open(f"{output_file_id}.jsonl", 'w', encoding='utf-8') as f:
            for obj in json_objects:
                f.write(json.dumps(obj) + "\n")
        print(f"Output file {output_file_id}.jsonl saved.")

    def api_call(self, message_content):
        # Synchronous (non-batch) request; not used by the UI, left in for testing
        model = "gpt-4o"
        try:
            response = client.chat.completions.create(
                model=model,
                messages=[
                    {"role": "system", "content": self.prompt_box.toPlainText()},
                    {"role": "user", "content": message_content},
                ],
            )
            generated_text = response.choices[0].message.content.strip()
        except Exception as e:
            generated_text = f"Error: {str(e)}"
        return generated_text


if __name__ == '__main__':
    app = QApplication(sys.argv)
    ex = BatchClassifierApp()
    ex.show()
    sys.exit(app.exec_())
```